Abstract:

The success of the Next Generation Science Standards and the New York State Science Learning Standards depends on effective assessment of what students are learning. Conventional assessment methods do not provide teachers with practical, useful, and timely information on what students have learned and need to learn. Instead they generate aggregated scores which can only be used to compare and rank students. The information that is actually needed to plan and enhance instruction is information on how well students have attained specific learning goals. Teachers can and must play a critical role in obtaining and applying that information. This paper presents an approach that we call Practical Educational Science which places the teacher at the center of action research procedures that produce precisely the information they need to plan and enhance instruction of the NGSS/NYSSLS standards. Practical Educational Science is based on systematic study of what students have actually learned or not learned. Its procedures parallel those used in natural science and the social sciences but remain faithful to the practical needs and requirements of educational environments.

The Problem:

Information from classroom assessments is characterized by uncertainty, ambiguity, and unreliability. Moreover, this information is primarily used to generate grades and not to contribute to everyday instruction. This is largely because, while we know how students perform on tests, we do not know specifically what each of them has learned and what they have not learned.

A systematic approach to addressing these problems can actually take the form of the science of education that John Dewey called for almost 100 years ago in his address to the International Honor Society in Education. Dewey posed the question — “Can there be a science of education…Are the procedures and aims of education such that it is possible to reduce them to anything properly called a science?” (Dewey, 2013, orig. 1929).

While scientific ideas have been applied and developed at the academic and theoretical level of education over the last century, we propose the application of scientific practices in the classroom itself to increase the dependability of our assessment results, and to obtain practical, useful, reliable and timely information on student attainment of NGSS/NYSSLS learning standards.

Essential Elements of Scientific Investigation:

Education need only look to the practices of natural scientists as a model for such an approach. While there is broad variation in the methods employed by scientists to gain new knowledge (NRC, 2012), they generally begin with a problem, or remarkable observation, which is translated into questions. Based on their disciplinary knowledge and their review of previous research in the field, scientists develop hypotheses that offer answers to their questions. Factors which might be causes and effects of a phenomenon or observation are identified. When measures are developed for these factors, a process of mathematization begins, and the factors become variables. Independent variables are those imagined to cause some outcome, while the measurement of the outcome itself is conceived of as a dependent variable. Experiments are designed and conducted to establish relationships between these variables of interest. Experiments conclude with data analysis and the dissemination of results and conclusions to the scientific community for peer review.

Natural Science as a model for Practical Educational Science:

The reality is that teachers already engage in quasi-scientific inquiries all the time in their classrooms. However, there are certain critical differences between what happens in a classroom and what happens in the natural sciences. There are also critical improvements that must be made in what is typically practiced in the classroom before we can consider it to be truly scientific. Let us begin by building an analogy between what happens in the natural sciences and what happens in the classroom.

In the natural sciences, research begins with observations of objects of interest which are typically natural phenomena. But what are the primary objects of interest in education? They are none other than the capabilities that we want our students to attain: the concepts, skills and dispositions represented by our learning goals. But these human capabilities themselves are generally not observable. In this they are more like gravity than they are like the color of the pea plant’s flower. Assessment is the instrument used to provide observable evidence of the degree to which these capabilities have been attained. The practical representations of educable human capabilities have many names: intended learning outcomes, instructional objectives, learning objectives, learning goals, and, more recently, standards. These terms can be used interchangeably although they may be used in specific ways in different contexts. The standards and performance expectations that make up the Next Generation Science Standards (NRC, 2013) and the New York State Science Learning Standards (NYSED, 2017) are systematic collections of such learning goals.

As teachers, we formulate hypotheses every time we plan an instructional intervention to help students attain one of these learning goals. What we are actually doing is imagining that the instruction that we intend to implement will result in student attainment of learning goals (e.g. standards). What happens next is more or less an experiment in which a treatment (instruction) is administered to a group of students in order to bring about a desired learning outcome.

In natural scientific experiments, great attention is paid to identifying and isolating the presumed causal factor represented by the independent variable. This is done in order to identify precisely which factor may be influencing the effect measured by the dependent variable. This is the scientific practice called control of variables. In education we are not capable of isolating the myriad factors that might affect the degree of attainment of a particular learning goal. Such factors include personality traits of students; their previous educational attainments; and numerous social, cultural and biological factors as well. There are, however, two sets of factors over which we as teachers have control: instruction and the qualities of our learning environment. We do not typically mathematize these factors as independent variables in our daily work, but we do manipulate them and always have hypotheses concerning how they will affect the attainment of learning outcomes.

Just as in the natural sciences, teachers collect data on the outcomes of their instructional experiment. Teachers do this through assessments. Assessments produce examples of student performance which serve as evidence of student attainment. It is what is done with that evidence that must evolve to truly reveal the state of each student’s capabilities and be the basis for a science of education.

Misaggregation — the chief threat to the usefulness of educational information

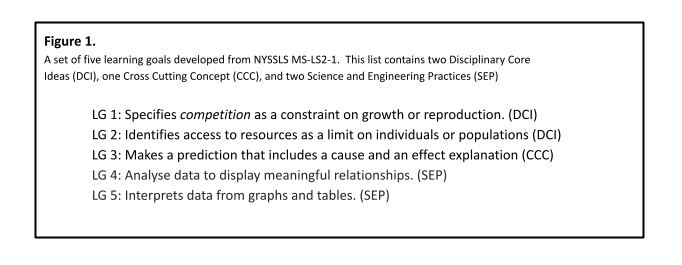

The true work of isolating and controlling variables in the field of education must be carried out in the realm of the dependent variable. Imagine now that we wish to study the effect of instruction on a set of intended learning outcomes drawn from one NGSS performance expectation. For example, consider the five learning goals unpacked from NGSS MS-LS2-1 in Figure 1:

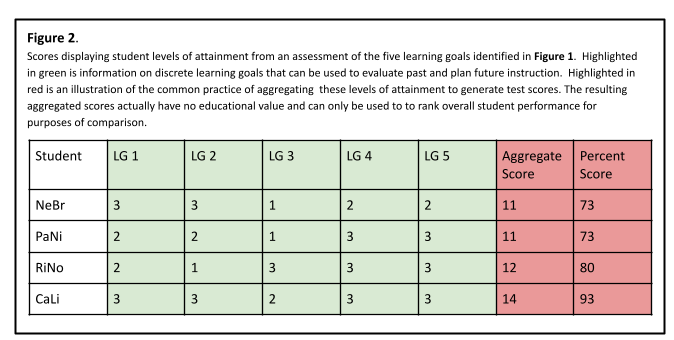

Imagine we create a lively assessment activity that can engage students and reveal their levels of attainment of these learning goals. We devise scales and rubrics to assess these levels of attainment. A common practice is to then devise a scheme for aggregating the individual scores for each learning goal to generate total scores for each student, perhaps so that the total score will come out to an even 100%.

But what happens when we do this? In the process of combining the levels of attainment of the separate learning goals to generate a total score, the critical information needed to plan and evaluate teaching has been obliterated. A score of this kind obtained as a pretest is empty of the information actually needed to determine what instruction the students need. Two students may each achieve a score of 73, but underlying those identical test scores are completely different constellations of successful performance. The same loss of information occurs when the score is taken as a posttest: there is no way to know from a score of 73 which capabilities might have been sensitive to the instructional treatment (the independent variable). See Figure 2.

This clumping of what should be information on distinct learning outcomes into a single score can be called misaggregation. What can we possibly do with the resulting score? Really only one thing — we can compare our students and see which had higher or lower scores and, unfortunately, use that information to affect students’ lives without being able to improve teaching or their learning. Misaggregation takes away the educational usefulness of the information that we so painstakingly created when designing, administering, and scoring our assessments.
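The loss of information described above can be illustrated with a minimal sketch. The goal labels `LG1` to `LG5`, the 0-20 scale per goal, the threshold of 14, and the scores themselves are all hypothetical values chosen for illustration; they are not taken from the figures or from any actual assessment.

```python
# Hypothetical rubric scores (0-20 per learning goal) for two students.
# LG1..LG5 are placeholder labels, not the goals shown in the figures.
scores = {
    "Student A": {"LG1": 20, "LG2": 18, "LG3": 15, "LG4": 12, "LG5": 8},
    "Student B": {"LG1": 8, "LG2": 12, "LG3": 15, "LG4": 18, "LG5": 20},
}


def total_score(goal_scores):
    """Collapse per-goal scores into one number (the misaggregation step)."""
    return sum(goal_scores.values())


def goals_below(goal_scores, threshold):
    """Return the goals whose score falls below an assumed threshold."""
    return sorted(goal for goal, s in goal_scores.items() if s < threshold)


totals = {name: total_score(gs) for name, gs in scores.items()}
needs = {name: goals_below(gs, threshold=14) for name, gs in scores.items()}

print(totals)  # both students receive the same total
print(needs)   # but the goals needing attention differ
```

In this sketch both totals come to 73, yet Student A falls short on `LG4` and `LG5` while Student B falls short on `LG1` and `LG2`. Only the per-goal view distinguishes them.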

What is truly educational information?

Educationally useful information is always and only in the form of how well a discrete learning goal has been attained. Only that information can be used to plan, evaluate and enhance the treatment factors that make up our instruction and educational environment. The central role of discrete learning goals as the basis for educational thinking and action was Mauritz Johnson’s great contribution to educational theory in the last third of the 20th century (1967, 1977). This allowed him to establish a precise understanding of the nature of curriculum and instruction. The power of the learning goal as a unifying concept has been extended further to provide a more productive conceptualization of assessment and evaluation (Zachos & Doane, 2017).

NGSS and NYSSLS standards are simply broad sets of learning goals which are made more specific as performance expectations (PEs). Performance expectations may themselves contain several discrete learning goals that must be measured separately as distinctly different dependent variables. Figure 3 displays the components of the NGSS MS-LS2-1 Performance Expectation unpacked into a set of eight distinct learning goals.

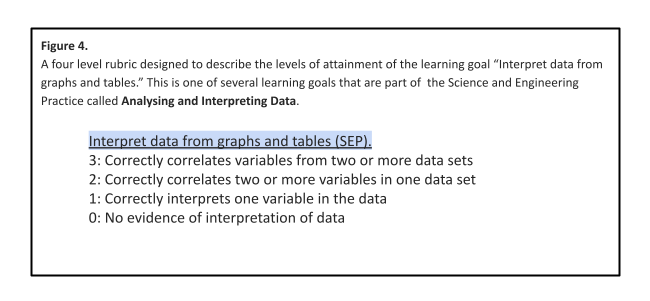

When scales of attainment and rubrics are developed, learning goals are mathematized and become the dependent variables of education experiments. In common use, a rubric may conflate multiple learning goals within a single level of attainment. Scales and rubrics that produce precise and unambiguous information must instead define levels of attainment for each learning goal clearly and separately from those of any other learning goal. Levels of attainment, when clearly defined, show progressions of learning that are discernible from one another, so that students’ capabilities may be measured just like centimeters on a ruler. See Figure 4.

The identification of discrete learning goals and specification of levels of attainment through rubrics is essentially the development of a measurement tool. In educational science that measurement tool is applied to examples of student performance which will serve as evidence of student attainment of the learning goal. That evidence is collected through assessment activities. These measurement activities can be applied to examples of student performance before, during or after instruction. The assessment activity and the rubric work together, both focused on the same goal of measuring student attainment of discrete learning goals.
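As a rough sketch of how measurement before and after instruction might be compared goal by goal, consider the following. The four-level scale (0 to 3), the goal labels, and the data are assumptions made for illustration and do not come from an actual rubric.

```python
# Hypothetical attainment levels (0-3 on an assumed four-level rubric)
# for one student, measured before and after instruction.
pre = {"LG1": 1, "LG2": 0, "LG3": 2, "LG4": 1}
post = {"LG1": 3, "LG2": 0, "LG3": 2, "LG4": 2}


def gains_by_goal(before, after):
    """Change in attainment level for each learning goal, kept separate."""
    return {goal: after[goal] - before[goal] for goal in before}


gains = gains_by_goal(pre, post)

# Goals whose level did not change between the two measurements.
unchanged = sorted(goal for goal, delta in gains.items() if delta == 0)

print(gains)
print(unchanged)
```

Because each learning goal is its own dependent variable, the comparison shows which goals moved after instruction and which did not, something a single pre/post total could not reveal.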

What can be done with truly educational information?

With knowledge of how well each student has attained discrete learning goals teachers can make targeted decisions concerning instruction. Whole class instruction may need to take a new direction, students may be split into groups to receive instruction on a particular learning goal, students can effectively be assigned to help other students, and teachers can decide who needs individual instruction and design what that instruction needs to be. Likewise, students are empowered by knowing what they do and do not know. Their conversations with teachers shift from “What did I get on that test?” to “Can you help me meet this specific goal?”
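One way such grouping decisions could be derived from per-goal information is sketched below. The student names, goal labels, levels, and the choice of level 3 as "attained" are hypothetical; a teacher would substitute the levels defined by the rubric in use.

```python
from collections import defaultdict

# Hypothetical attainment levels (0-3) per student and learning goal.
attainment = {
    "Ana": {"LG1": 3, "LG2": 1, "LG3": 3},
    "Ben": {"LG1": 1, "LG2": 1, "LG3": 3},
    "Chloe": {"LG1": 3, "LG2": 3, "LG3": 0},
}
TARGET_LEVEL = 3  # assumed level that counts as "attained"


def group_by_need(levels, target):
    """For each goal, list students needing instruction and possible peer helpers."""
    groups = defaultdict(lambda: {"needs_instruction": [], "can_help": []})
    for student, goals in levels.items():
        for goal, level in goals.items():
            key = "can_help" if level >= target else "needs_instruction"
            groups[goal][key].append(student)
    return dict(groups)


groups = group_by_need(attainment, TARGET_LEVEL)
for goal in sorted(groups):
    print(goal, groups[goal])
```

In this illustration, Ana and Ben would form a group for `LG2` with Chloe as a possible peer helper, while Chloe alone needs instruction on `LG3`.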

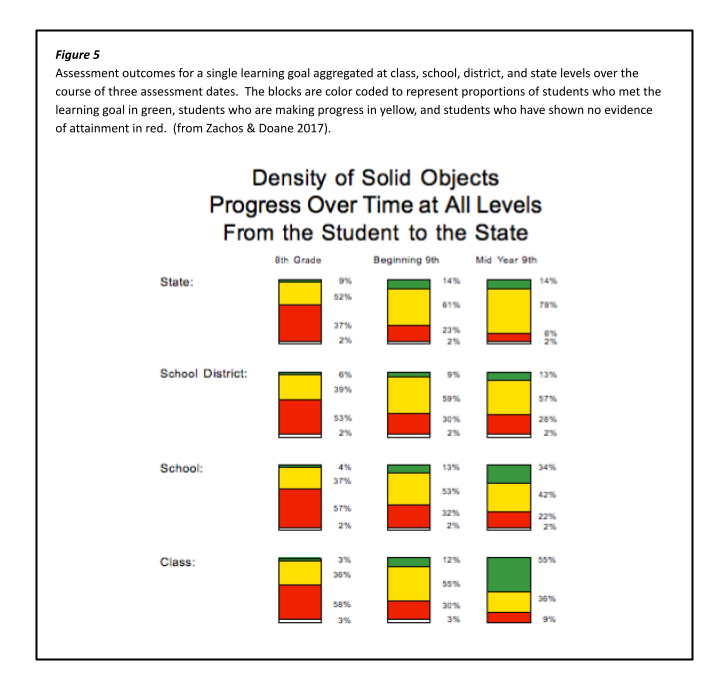

Interestingly, information that tells how well performance expectations have been attained can be aggregated in a meaningful way. Rather than comparing passing rates on assessments at the school, state, or national level, educators and policy makers can focus on the attainment of discrete learning goals at all levels of the educational hierarchy as shown in Figure 5. This allows educators to identify and treat specific weaknesses and leverage strengths in their educational institutions.
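A minimal sketch of this kind of meaningful aggregation follows. The record layout, school and classroom names, and results are invented for illustration, and classroom names are assumed to be unique. The point is only that attainment is summarized per learning goal at each level rather than collapsed across goals.

```python
# Hypothetical records: (school, classroom, student, goal, attained?)
records = [
    ("School X", "Room 1", "s1", "LG1", True),
    ("School X", "Room 1", "s1", "LG2", False),
    ("School X", "Room 1", "s2", "LG1", True),
    ("School X", "Room 1", "s2", "LG2", False),
    ("School X", "Room 2", "s3", "LG1", False),
    ("School X", "Room 2", "s3", "LG2", True),
    ("School X", "Room 2", "s4", "LG1", True),
    ("School X", "Room 2", "s4", "LG2", True),
]


def attainment_rate(rows, level_index):
    """Fraction of students attaining each goal, grouped at one level
    of the hierarchy (0 = school, 1 = classroom)."""
    counts = {}
    for row in rows:
        key = (row[level_index], row[3])
        attained, total = counts.get(key, (0, 0))
        counts[key] = (attained + int(row[4]), total + 1)
    return {key: attained / total for key, (attained, total) in counts.items()}


by_classroom = attainment_rate(records, level_index=1)
by_school = attainment_rate(records, level_index=0)

print(by_classroom)
print(by_school)
```

Here the school-level view shows `LG2` attained by half of the students, and the classroom-level view locates that weakness in Room 1. A single passing rate would hide both facts.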

Summary:

Consider the critical difference between traditional grading practices and the Practical Educational Science approach. Typically, when an assessment task is administered and scored, a student is given a grade that masks information on how well the specific learning goals were attained. Standards-based grading attempts to get meaningful information by reporting at the level of the standard. But as we have seen, standards are still complex, multifaceted structures, making it difficult to identify which specific learning goals a student has or has not attained. Practical Educational Science takes a more productive approach to assessment by identifying discrete learning goals and measuring student attainment of those goals. In doing this, we can pinpoint specific learning deficiencies and then modify instruction or the educational environment to improve student achievement. The fundamental difference between the educational science approach and current educational assessment practices based on aggregated scores is that educational science reveals students’ levels of attainment of specific capabilities, and so permits more targeted responsive action.

Much of the rigorous educational research that has contributed to our understanding of teaching and learning has been carried out at institutions far removed from the P-12 science classroom. The Practical Educational Science approach that we have described here is no less scientifically rigorous than those research paradigms. It differs in that it is an intimate, immediately relevant action research activity, involving the teacher and her students, one that can be enriched by collaboration with colleagues. Practical Educational Science speaks specifically to the learning goals that a teacher is or will be working on and generates results relevant to the curriculum, instruction, assessment and evaluation that are presently in play for that teacher and school.

A critical next step is to consider how teachers and schools can collaborate to systematically build, test and refine the assessment activities that they are creating for their students. This can be seen as a grassroots effort to build, test and refine classroom assessments for NGSS/NYSSLS. The goal of these classroom assessments would be to produce practical, useful, reliable and timely information on which specific standards and performance expectations students have actually attained. These collaborations can be carried out with the same level of creativity, quality control, fellowship and camaraderie that characterize teachers’ collaboration to build test items for New York State Regents examinations.

Towards this end we have developed a website where NGSS/NYSSLS assessment kits developed by schools and teachers are posted and vetted. We will also be taking part in workshops where the methods of Practical Educational Science can be studied and put into practice. More information on both of these can be found at NGSSRubrics.org.

Works cited

Dewey, J. (2013, orig. 1929). The Sources of a Science of Education. New York: Liveright.

Johnson, M. (1967). Definitions and Models in Curriculum Theory. Educational Theory, 17, 127-140.

Johnson, M. (1977). Intentionality in Education. Albany, NY: Center for Curriculum Research and Services, State University of New York at Albany.

National Research Council. (2012). A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas. Washington, DC: The National Academies Press. https://doi.org/10.17226/13165

New York State Education Department. (2016). New York State P-12 Science Learning Standards. http://www.nysed.gov/common/nysed/files/programs/curriculum-instruction/p-12-science-learning-standards.pdf

National Research Council. (2013). Next Generation Science Standards: For States, By States. Washington, DC: The National Academies Press. https://www.nextgenscience.org/standards/standards

Zachos, P. & Doane, W. E.J. (2017). Knowing the Learner: A New Approach to Educational Information. Vermont: Shires Press.

Jason Brechko is a National Board Certified science teacher, NYS Master Teacher Emeritus, and ACASE associate. He currently enjoys teaching science at Glens Falls Middle School. He can be reached at jbrechko@gfsd.org.

518.480.8730

Glens Falls Middle School

20 Quade Street

Glens Falls, NY 12801

Paul Zachos PhD is Director of Research and Evaluation for the Association for the Cooperative Advancement of Science and Education (ACASE). His special interest is helping teachers, trainers, and educational institutions to assess and monitor growth in the attainment of valued learning outcomes. He can be reached at paz@acase.org.

518.583.4645

ACASE

110 Spring Street

Saratoga Springs, NY 12866